What Is Load Balancing? Popular Load Balancing Technologies

What is load balancing? It is a critical technology that distributes traffic and workloads across multiple servers to ensure stability, high availability, and optimal performance. By preventing overload and reducing latency, load balancing supports scalable and resilient infrastructures. Read on as Axclusive ISP explains how it works.

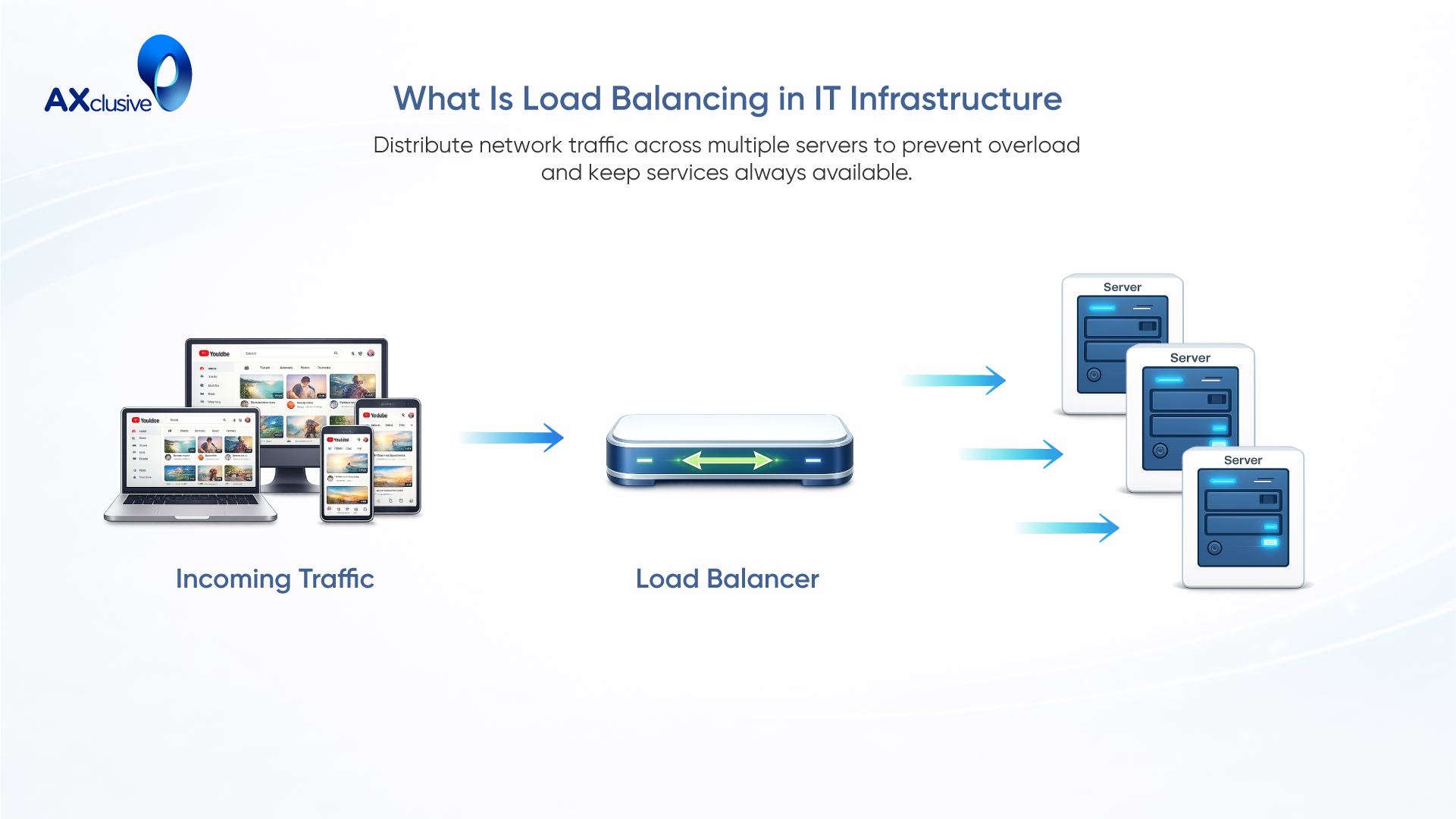

What Is Load Balancing in IT Infrastructure?

Load balancing is a core IT technique used to distribute computational workloads across two or more computers or servers. In internet and networking environments, load balancing directs incoming network traffic to multiple backend systems instead of a single server. This approach prevents overload, ensures stable operation, and maintains consistent service availability. By spreading traffic across servers, load balancing reduces latency, improves response times, and increases system efficiency. For modern internet applications, load balancing is a fundamental requirement that enables reliable performance and uninterrupted access for users.

Benefits of Load Balancing for Business Systems

Load balancing plays a critical role in maintaining reliable and high-performing digital systems. Users expect fast access to information and uninterrupted transaction processing at all times. When applications respond slowly, behave inconsistently, or fail during peak usage, users lose trust and may abandon the service. Load balancing addresses this risk by controlling how workloads are distributed across servers, ensuring systems remain stable even when demand increases suddenly.

Improved application availability

Load balancing helps keep applications accessible for both internal teams and external users. When traffic is shared across multiple servers, no single system becomes a point of failure. If one server slows down or stops responding, traffic is automatically redirected to healthy systems. This reduces downtime, protects business operations, and ensures users can continue accessing services without interruption.

Scalable application growth

Modern applications must handle unpredictable traffic patterns. For example, an online ticket platform may experience a sudden surge when sales open. With load balancing in place, traffic is spread across multiple computing resources instead of overwhelming one server. This allows businesses to scale capacity during peak demand and serve more users without degrading performance or requiring major infrastructure changes.

Stronger security posture

Load balancing supports security by limiting how much traffic any single system receives. Distributing requests across backend servers reduces exposure to resource exhaustion and makes it harder for attackers to disrupt services. Load balancers can also isolate compromised systems by redirecting traffic elsewhere. In addition, many load balancing solutions provide built-in protection against distributed denial-of-service (DDoS) attacks by absorbing and rerouting malicious traffic.

Consistent application performance

By improving availability, scalability, and security, load balancing directly enhances application performance. Requests are processed faster, response times remain stable, and systems continue to operate under heavy load. This ensures applications function as intended and deliver a reliable experience that users and customers can depend on.

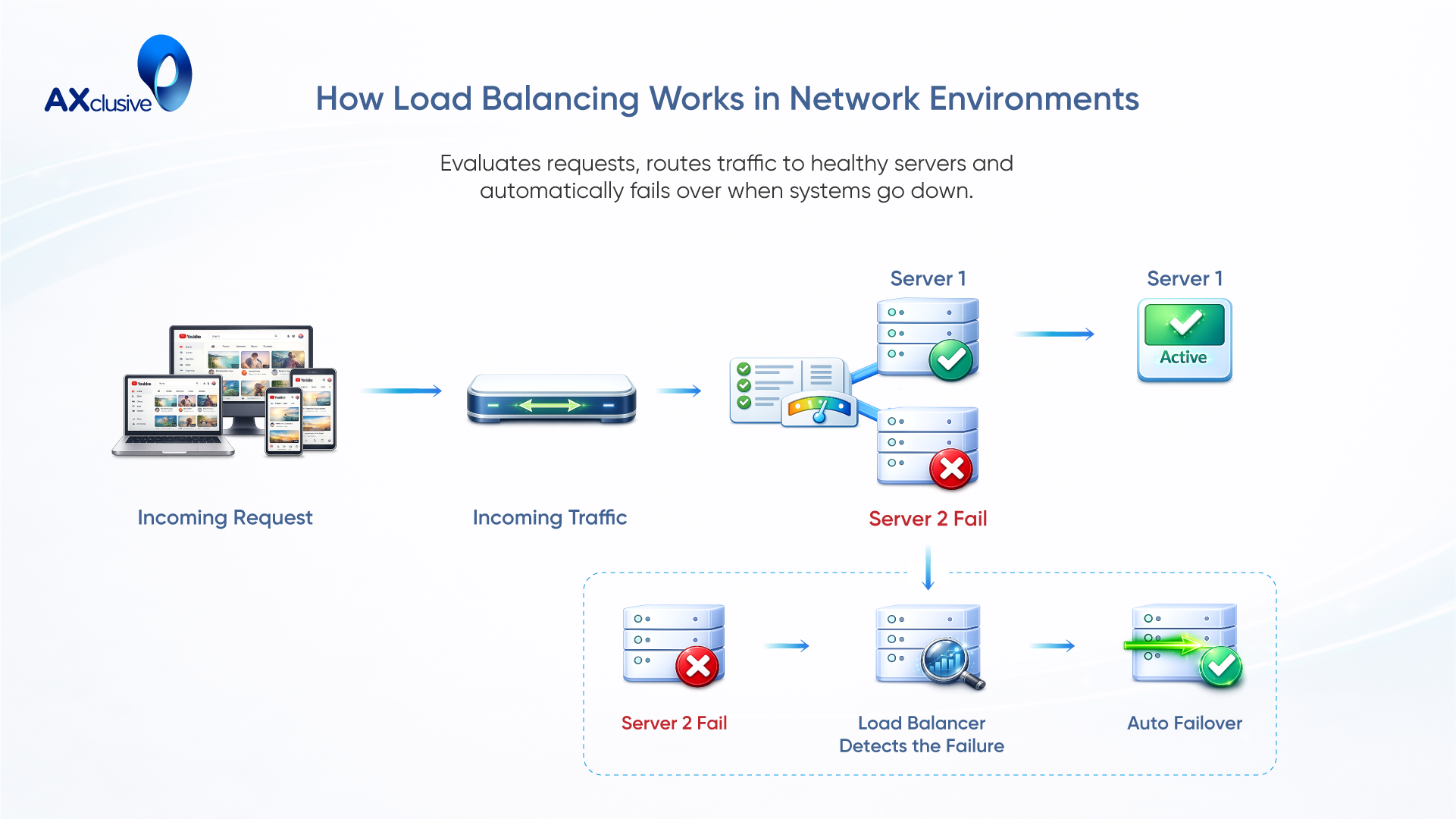

How Load Balancing Works in Network Environments

Load balancing operates by evaluating incoming user requests and directing them to an appropriate backend server using predefined rules or real-time conditions. This distribution can follow fixed logic or adjust dynamically based on server availability and capacity. Each request is sent only to systems that are able to process it at that moment. When a server becomes unavailable or fails to respond, the load balancer automatically removes it from service and routes traffic to other active servers. This mechanism ensures continuous service delivery, maintains system reliability, and prevents disruption caused by single points of failure.
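The failover behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not any product's implementation: the `probe` function stands in for a real health check (for example, an HTTP request to a status URL), and the backend names are hypothetical. Backends that fail their latest check are skipped until a later check marks them healthy again.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Backend:
    name: str
    healthy: bool = True


class HealthAwarePool:
    """Routes requests only to backends that passed their most recent health check."""

    def __init__(self, backends: list[Backend], probe: Callable[[Backend], bool]):
        if not backends:
            raise ValueError("at least one backend is required")
        self.backends = backends
        # Hypothetical health-check function: returns True if the backend responds correctly.
        self.probe = probe
        self._next = 0

    def run_health_checks(self) -> None:
        # Mark each backend healthy or unhealthy based on the probe result.
        for backend in self.backends:
            backend.healthy = self.probe(backend)

    def pick(self) -> Backend:
        # Rotate through the pool, skipping backends currently marked unhealthy.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    down = {"app-2"}  # simulate one failed server
    pool = HealthAwarePool(
        [Backend("app-1"), Backend("app-2"), Backend("app-3")],
        probe=lambda b: b.name not in down,
    )
    pool.run_health_checks()
    print([pool.pick().name for _ in range(4)])
```

In practice the health checks would run on a timer, independently of request handling, so that a recovered server rejoins the rotation automatically.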

Load Balancing Deployment Models

Load balancers are deployed at different layers of the Open Systems Interconnection (OSI) model, depending on the level of traffic inspection and control required. While the OSI model consists of seven layers, load balancing is most commonly implemented at Layer 4 (Transport) and Layer 7 (Application), where it can directly influence how traffic is routed and processed by backend systems.

Cloud-Native Load Balancing Solutions

Cloud-native load balancing solutions represent a modern approach to load balancing in networking. These solutions are delivered as managed services within cloud platforms and are designed to scale automatically as demand changes. Instead of relying on physical devices, cloud-native load balancers operate as distributed software systems.

A cloud-native load balancing service provides a virtual endpoint that users connect to. Backend resources such as virtual machines, containers, or services are registered behind this endpoint. This model supports virtual load balancing and removes the need to manage underlying hardware.

Cloud platforms support multiple routing methods. Application load balancing distributes requests based on application behavior and request data. This improves performance and supports efficient scaling. Global server load balancing routes users to the closest or best-performing geographic location, reducing latency for global audiences.
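The core decision in global server load balancing can be pictured as a selection over regions: discard regions that are unavailable, then prefer the one with the lowest latency for the user. The Python sketch below assumes hypothetical region names and latency figures; production GSLB systems often apply this kind of decision when answering DNS queries and may weigh further signals such as geography and capacity.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    available: bool
    latency_ms: float  # hypothetical measured round-trip time from the user's vicinity


def choose_region(regions: list[Region]) -> Region:
    """Pick the available region with the lowest measured latency."""
    candidates = [r for r in regions if r.available]
    if not candidates:
        raise RuntimeError("no region is currently available")
    return min(candidates, key=lambda r: r.latency_ms)


if __name__ == "__main__":
    regions = [
        Region("eu-west", True, 24.0),
        Region("us-east", True, 95.0),
        Region("ap-southeast", True, 180.0),
    ]
    print(choose_region(regions).name)
```

If the closest region goes offline, the same function falls back to the next-best available region, which is how GSLB combines latency reduction with availability.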

Load Balancer Architecture and Mechanisms

Layer 7 load balancers operate at the application layer and can inspect application-specific data, such as HTTP headers, URLs, cookies, SSL session identifiers, or request parameters. This deeper visibility allows for more intelligent routing decisions, often referred to as content-based switching.

By understanding the structure and intent of application requests, Layer 7 load balancers can route traffic based on business logic, for example by directing API calls to specific services or separating static and dynamic content across different server pools. This makes them particularly valuable for modern web applications, microservices architectures, and API-driven platforms.
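Content-based switching boils down to a rule table evaluated against each request. The Python sketch below illustrates the idea under stated assumptions: the pool names, path prefixes, and the `X-Beta-User` header are all hypothetical, and header names are assumed to be already normalized (real HTTP header names are case-insensitive).

```python
from urllib.parse import urlsplit

# Hypothetical routing table: URL path prefix -> backend pool name.
ROUTES = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def route_request(url: str, headers: dict[str, str]) -> str:
    """Choose a backend pool from request content (headers and URL path), Layer 7 style."""
    # Header rule: a hypothetical header that sends beta users to a separate pool.
    if headers.get("X-Beta-User") == "true":
        return "beta-pool"
    # Path rules: the first matching prefix wins.
    path = urlsplit(url).path
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


if __name__ == "__main__":
    print(route_request("https://example.com/api/orders?id=7", {}))
    print(route_request("https://example.com/static/logo.png", {}))
```

A Layer 4 balancer could not make these decisions, because it sees only addresses and ports, not the URL or headers inside the request.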

Load Balancing Algorithms

A load balancing algorithm defines how incoming requests are distributed across available servers within a system. Each algorithm is designed to address specific operational requirements, such as simplicity, performance optimization, session persistence, or efficient resource utilization. Selecting the right algorithm depends on application behavior, traffic patterns, and infrastructure capacity.

Below are some of the most commonly used load balancing algorithms and their practical use cases.

Sequential Distribution

Round robin is a straightforward approach that assigns requests to servers in a fixed, sequential order. Each new request is forwarded to the next server in the rotation, ensuring an even distribution over time. Because this method does not account for server load or performance differences, it is best suited for environments where backend servers have similar capacity and workloads.
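A minimal Python sketch of round robin follows; the server names are hypothetical. The balancer simply cycles through a fixed list, ignoring how busy each server is, which is exactly the limitation noted above.

```python
from itertools import cycle


class RoundRobin:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers: list[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


if __name__ == "__main__":
    lb = RoundRobin(["s1", "s2", "s3"])
    print([lb.next_server() for _ in range(5)])  # ['s1', 's2', 's3', 's1', 's2']
```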

Weighted Distribution

Weighted round robin enhances the basic round robin model by assigning a relative weight to each server. Servers with higher capacity or better performance are given higher weights, allowing them to receive a larger share of incoming traffic. This approach enables administrators to align traffic distribution with real-world resource capabilities, making it effective in heterogeneous environments where servers differ in processing power.
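One way to implement weighted round robin is the "smooth" variant that nginx uses for weighted upstream servers, sketched below in Python as an illustration rather than a copy of any product's code. Each server accumulates its weight on every pick, the highest score wins, and the winner is charged the total weight. Over one full cycle each server is chosen in proportion to its weight, but picks for the heavy server are interleaved rather than sent in a burst. Server names and weights are hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin using the smooth interleaving technique."""

    def __init__(self, weights: dict[str, int]):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # Raise every server's running score by its weight...
        for name, weight in self.weights.items():
            self.current[name] += weight
        # ...pick the highest score (the first server listed wins ties)...
        chosen = max(self.current, key=self.current.get)
        # ...and charge the winner the total weight so the others catch up.
        self.current[chosen] -= self.total
        return chosen


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
    print([lb.next_server() for _ in range(7)])
```

With weights 5, 1, and 1, one cycle of seven picks yields `big, big, small-1, big, small-2, big, big`: five picks for the larger server, spread across the cycle.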

IP-Based Routing

IP-based routing uses a deterministic calculation based on the client’s IP address to select a backend server. By applying a hashing function to the source IP, the load balancer consistently maps requests from the same client to the same server. This method is particularly useful for applications that require session persistence, as it helps maintain continuity without relying on shared session storage.
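The hashing step can be sketched in Python as follows, assuming a hypothetical list of server names. A stable digest (SHA-256 here, chosen for illustration) is used instead of Python's built-in `hash()`, which is randomized per process for strings and would break stickiness after a restart.

```python
import hashlib


def pick_by_client_ip(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a server deterministically by hashing the source address."""
    if not servers:
        raise ValueError("at least one server is required")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce modulo the pool size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


if __name__ == "__main__":
    servers = ["s1", "s2", "s3"]
    print(pick_by_client_ip("203.0.113.10", servers))
```

Two caveats apply to this simple modulo scheme: adding or removing a server remaps most clients (consistent hashing reduces that churn), and many users behind one NAT address all land on the same server.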

Connection-Based Routing

The least connections algorithm directs new requests to the server with the fewest active connections at the time of arrival. By considering current connection counts, this method dynamically adapts to changing workloads and prevents individual servers from becoming overloaded. It is well-suited for applications where sessions vary in duration or resource consumption.
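A minimal Python sketch of least connections follows, assuming hypothetical server names and that the caller reports when each request finishes. The balancer keeps a live count per server and always picks the smallest.

```python
class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers: list[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self.active = {name: 0 for name in servers}

    def acquire(self) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a request or connection on this server finishes.
        self.active[server] -= 1


if __name__ == "__main__":
    lb = LeastConnections(["s1", "s2", "s3"])
    busy = [lb.acquire() for _ in range(3)]  # one connection each
    lb.release("s2")                         # s2's request finishes first
    print(lb.acquire())                      # s2 now has the fewest connections
```

Because long-running sessions keep their count high, this method naturally steers new traffic away from servers that are still busy.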

Response-Time Routing

Least response time combines real-time performance metrics with connection awareness. The load balancer evaluates both the number of active connections and each server’s average response time, then routes traffic to the server that can respond most efficiently. This algorithm is particularly effective in performance-sensitive environments where minimizing latency is a priority.
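The Python sketch below illustrates least response time. The scoring formula, (active connections + 1) × average response time, is an illustrative assumption, as is the moving-average smoothing factor; real products combine these two signals in different ways. Server names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    active_connections: int = 0
    avg_response_ms: float = 0.0


class LeastResponseTime:
    """Pick the server with the lowest (active connections + 1) x average response time."""

    def __init__(self, servers: list[str], smoothing: float = 0.2):
        if not servers:
            raise ValueError("at least one server is required")
        self.stats = {name: ServerStats() for name in servers}
        self.smoothing = smoothing  # weight given to the newest response-time sample

    def score(self, name: str) -> float:
        s = self.stats[name]
        # A server with no samples yet scores 0, so it is tried first.
        return (s.active_connections + 1) * s.avg_response_ms

    def acquire(self) -> str:
        server = min(self.stats, key=self.score)
        self.stats[server].active_connections += 1
        return server

    def release(self, server: str, elapsed_ms: float) -> None:
        s = self.stats[server]
        s.active_connections -= 1
        # Exponentially weighted moving average of observed response times.
        if s.avg_response_ms == 0.0:
            s.avg_response_ms = elapsed_ms
        else:
            s.avg_response_ms += self.smoothing * (elapsed_ms - s.avg_response_ms)
```

With a fast server averaging 20 ms and a slow one averaging 80 ms, the fast server keeps winning until it is carrying enough connections that its score exceeds the slow server's, at which point traffic shifts.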

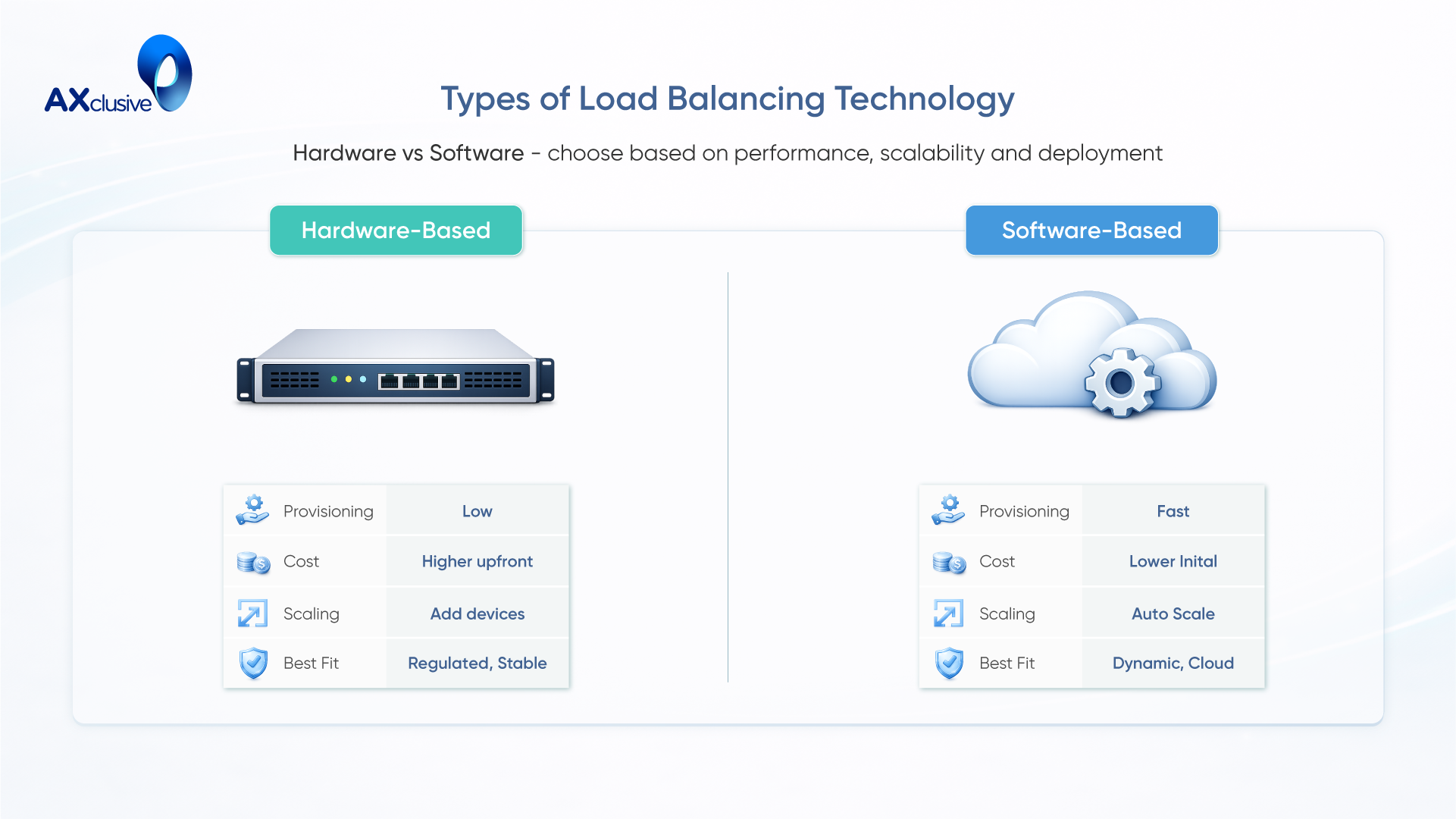

Types of Load Balancing Technology

Load balancing technologies are generally categorized into hardware-based and software-based solutions. Each type addresses different operational needs and infrastructure models, and the choice between them depends on performance requirements, scalability expectations, and deployment environments.

Hardware-Based Balancing

Hardware load balancers are dedicated physical appliances engineered to manage high volumes of network traffic with consistent performance and low latency. Deployed within on-premises data centers, these devices are optimized at both the hardware and operating system levels to efficiently inspect, route, and secure traffic across large server pools.

Many hardware solutions support virtualization, allowing multiple logical load balancers to operate on a single physical device. This enables centralized management while maintaining strict performance isolation. Hardware load balancers are commonly used in environments where predictable throughput, regulatory control, and long-term infrastructure stability are critical.

Software-Based Load Balancing

Software load balancers deliver the same core traffic distribution functions through software applications rather than physical devices. They can be deployed on standard servers, virtual machines, containers, or consumed as fully managed services in cloud platforms.

This approach offers significant flexibility. Software-based solutions can be rapidly provisioned, updated, and scaled as demand changes, making them well suited for dynamic workloads and modern application architectures. Their ability to integrate seamlessly with cloud-native ecosystems makes them a preferred choice for organizations adopting DevOps, microservices, and hybrid or multi-cloud strategies.

Comparison of Hardware and Software Load Balancers

Hardware load balancers typically involve higher upfront costs, including procurement, configuration, and ongoing maintenance. They are often sized to accommodate peak traffic scenarios, which can lead to underutilized capacity during normal operations. Scaling beyond the original design usually requires purchasing and deploying additional devices, introducing time and operational overhead.

Software-based load balancers, by contrast, are designed for elastic scalability. They can automatically scale up or down in response to real-time traffic conditions, ensuring resources are used efficiently. From a cost perspective, they generally require less initial investment and offer lower long-term operational expenses, particularly in cloud environments where consumption-based pricing models apply.

FAQs

How do static and dynamic load balancing algorithms differ?

Static load balancing, such as round robin, uses predefined rules to distribute traffic and does not change based on real-time conditions. Dynamic load balancing, such as least connections, adjusts traffic distribution based on server health, current load, and response times to improve efficiency.

How do load balancers check server health?

Load balancers perform regular health checks by sending requests to backend servers. If a server fails to respond correctly, it is marked unavailable and removed from traffic rotation.

What occurs when a backend server becomes unavailable?

When a backend server goes offline, the load balancer stops sending traffic to it and redirects requests to other healthy servers. This prevents service disruption and maintains availability.

Why does load balancing increase application performance?

Load balancing spreads traffic across multiple servers, reducing overload on any single system. This results in faster response times, lower latency, and more consistent performance.

Which load balancing approaches are most widely used?

The most common approaches include Layer 4 and Layer 7 load balancing, DNS-based load balancing, and cloud-native load balancing services.

How does global server load balancing (GSLB) operate?

Global server load balancing routes users to the closest or best-performing geographic location. It uses factors such as latency, availability, and location to optimize traffic delivery.

How does load balancing improve the overall user experience?

Load balancing ensures faster access, stable performance, and minimal downtime. Users experience fewer errors and consistent service, even during peak traffic periods.

Load balancing plays a vital role in maintaining stable, scalable, and high-performance IT environments. As application complexity and user demand continue to grow, intelligently distributing traffic becomes essential for ensuring uptime, consistent performance, and reliable user experiences. By improving response times and reducing operational risk, load balancing remains a key element of modern digital infrastructure.

Through this article, Axclusive introduces load balancing as a foundational approach to building resilient network and application architectures. The discussion covers not only traffic distribution but also performance optimization, scalability planning, and efficient infrastructure design, helping organizations understand why load balancing is essential in today’s connected environments.
